Many sources state that with log-linear modeling (LLM) there is no distinction between independent and dependent variables. I think it's still OK to think of IVs (predictors) in relation to a DV, however. In the example below (from the 1993 General Social Survey), the DV is political party identification (collapsed into Democratic [strong, weak], Republican [strong, weak], and Independent ["pure" Independent, Ind. Lean Dem., and Ind. Lean Repub.]). Predictors are religious affiliation (collapsing everyone other than Protestant and Catholic into "All Other & None"), college degree (yes/no), and gender.

Variables are typically represented by their initials (P, R, C, and G in our example). Further, putting two or more letters together (with no comma) signifies relationships among the respective variables. By convention, one's starting (or baseline) model posits that the DV (in this case, party identification) is unrelated to the three predictors, but the predictors are allowed to relate to each other. The notation [P, RCG] describes the hypothesis that one's decision to self-identify as a Democrat, Republican, or Independent (and make one's voting decisions accordingly) is not influenced in any way by one's religious affiliation, attainment (or not) of a college degree, or gender. However, any relationships in the data between predictors are taken into account. Putting the three predictors together (RCG) also allows for three-way relationships or interactions, such as Catholic females having a high rate of getting bachelor's degrees (I have no idea whether that's true). Three-way interaction terms (e.g., Religion X College X Gender) also include all the two-way interactions (RC, RG, CG) and one-way terms (R, C, G) contained within.

The orange column in the chart below shows us how many respondents actually appeared in each of the 36 cells representing combinations of political party (3) X religion (3) X college-degree (2) X gender (2).

The next column to the right shows us the expected frequencies generated by the [P, RCG] baseline model. We would not expect this model to do a great job of predicting cell frequencies, because it does not allow Party ID to be predicted by religion, college, or gender. Indeed, the expected frequencies under this model do not match the actual frequencies very well. I have highlighted in purple any cell in which the expected frequency comes within +/- 3 people of the actual frequency (the +/- 3 criterion is arbitrary; I just thought it gives a good feel for how well a given model does). The [P, RCG] model produces only 7 purple cells out of 36 possible. Each model also generates a chi-square value (use the likelihood-ratio version). As a reminder from previous stat classes, chi-square represents discrepancy (O-E) or "badness of fit," so a highly significant chi-square value for a given model signifies poor match to the actual frequencies. Significance levels for each model are indicated in the respective red boxes atop each column (***p < .001, **p < .01, *p < .05).
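To make the baseline computation concrete, here is a minimal Python sketch of how expected frequencies and the likelihood-ratio chi-square could be obtained for [P, RCG]. Because this model treats party as independent of the joint religion-college-gender classification, each expected count is the party total times the RCG-combination total, divided by N. The counts are made up by an arbitrary formula (they are not the GSS frequencies in the chart), and the function names are my own.

```python
from itertools import product
from math import log

# Levels follow the post; the counts are hypothetical, NOT the 1993 GSS data.
PARTY = ["Dem", "Rep", "Ind"]
RELIGION = ["Prot", "Cath", "Other"]
COLLEGE = ["degree", "no degree"]
GENDER = ["F", "M"]

cells = list(product(PARTY, RELIGION, COLLEGE, GENDER))  # 3 x 3 x 2 x 2 cells
# Arbitrary deterministic pattern, used only so the example runs.
observed = {cell: 20 + (7 * i) % 13 for i, cell in enumerate(cells)}

def baseline_expected(obs):
    """Expected counts under [P, RCG]: E = n(P) * n(RCG) / N."""
    n_total = sum(obs.values())
    n_p, n_rcg = {}, {}
    for (p, r, c, g), n in obs.items():
        n_p[p] = n_p.get(p, 0) + n
        n_rcg[(r, c, g)] = n_rcg.get((r, c, g), 0) + n
    return {(p, r, c, g): n_p[p] * n_rcg[(r, c, g)] / n_total
            for (p, r, c, g) in obs}

def g_squared(obs, exp):
    """Likelihood-ratio chi-square, G^2 = 2 * sum(O * ln(O / E)).

    Cells with O = 0 contribute nothing to the sum.
    """
    return 2 * sum(o * log(o / exp[cell]) for cell, o in obs.items() if o > 0)

expected = baseline_expected(observed)
g2 = g_squared(observed, expected)
# The post's informal "purple cell" criterion: expected within +/- 3 of observed.
close_cells = sum(abs(observed[c] - expected[c]) <= 3 for c in cells)
print(f"G^2 = {g2:.2f}; cells within +/-3: {close_cells} of {len(cells)}")
```

With real data, you would replace `observed` with the actual cell counts; the rest of the sketch would stay the same.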

After running the baseline model and obtaining its chi-square value, we then move on to more complex models that add relationships or linkages between the predictors and DV. The second red column shows expected frequencies for the model [PR, RCG]. This model keeps the previous RCG combination, but now adds a relationship between party (P) and religious (R) affiliation. If there is some relationship between party and religion, such as Protestants being more likely than other religious groups to identify as a Republican, the addition of the PR term will result in a substantial improvement in the match between expected frequencies for this model and the actual frequencies. Indeed, the [PR, RCG] model produces 16 well-fitting (purple) cells, a much better performance than the previous model. (Adding linkages such as PR instead of just P will either improve the fit or leave it the same; it cannot harm fit.)
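For readers curious how expected frequencies for a model like [PR, RCG] can be produced, one standard technique is iterative proportional fitting (IPF): start with a table of 1s and repeatedly rescale it so that its PR totals, then its RCG totals, match the observed ones. The sketch below is a bare-bones version under my own assumptions (made-up counts, numeric level codes, a fixed number of sweeps instead of a convergence check); it is not necessarily what your software does internally.

```python
from itertools import product

VARS = "PRCG"
LEVELS = {"P": 3, "R": 3, "C": 2, "G": 2}
CELLS = list(product(*(range(LEVELS[v]) for v in VARS)))

def margin(table, term):
    """Collapse a {cell: count} table onto the variables in `term`, e.g. "PR"."""
    idx = [VARS.index(v) for v in term]
    out = {}
    for cell, n in table.items():
        key = tuple(cell[i] for i in idx)
        out[key] = out.get(key, 0.0) + n
    return out

def ipf(observed, model, sweeps=50):
    """Iterative proportional fitting for a hierarchical model like ["PR", "RCG"]."""
    targets = {term: margin(observed, term) for term in model}
    fitted = {cell: 1.0 for cell in observed}
    for _ in range(sweeps):  # fixed sweep count; real code would test convergence
        for term in model:
            idx = [VARS.index(v) for v in term]
            current = margin(fitted, term)
            for cell in fitted:
                key = tuple(cell[i] for i in idx)
                if current[key] > 0:
                    fitted[cell] *= targets[term][key] / current[key]
    return fitted

# Hypothetical counts (NOT the GSS data), generated by an arbitrary formula.
observed = {cell: 20 + (7 * i) % 13 for i, cell in enumerate(CELLS)}
fitted = ipf(observed, ["PR", "RCG"])
print(f"first cell: observed {observed[CELLS[0]]}, expected {fitted[CELLS[0]]:.2f}")
```

The same function could be called with `["PRC", "RCG"]` or any other model in the chart; passing `["PRCG"]` simply reproduces the observed counts.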

Let's step back a minute and consider all the elements in the [P, RCG] and [PR, RCG] models:

[P, RCG]: P, RCG, RC, RG, CG, R, C, G

[PR, RCG]: PR, P, RCG, RC, RG, CG, R, C, G

Notice that all the terms in the first model are included within the second model, but the second model has one additional term (PR). The technical term is that the first model is nested within the second. Nestedness is required to conduct some of the statistical comparisons we will discuss later.

If we look at the model [PC, RCG], we see that it contains:

PC, P, RCG, RC, RG, CG, R, C, G

The models [PR, RCG] and [PC, RCG] are not nested. To go from [PR, RCG] to [PC, RCG], you would have to delete the PR term (because the latter doesn't have PR) and add the PC term. When you have to both add and subtract, two models are not nested.
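The bookkeeping in this section (expanding a model into all the terms it contains, then checking whether one model's terms are a subset of another's) is easy to sketch in Python. The function names `expand` and `is_nested` are my own, and the sketch assumes each variable is a single letter, as in our example.

```python
from itertools import combinations

ORDER = "PRCG"  # write terms in this letter order, as in the post

def expand(model):
    """Return every term implied by a hierarchical model such as ["PR", "RCG"]."""
    terms = set()
    for generator in model:
        letters = sorted(generator, key=ORDER.index)
        for size in range(1, len(letters) + 1):
            terms.update("".join(combo) for combo in combinations(letters, size))
    return terms

def is_nested(smaller, larger):
    """True if every term in `smaller` also appears in `larger`."""
    return expand(smaller) <= expand(larger)

baseline = ["P", "RCG"]
religion = ["PR", "RCG"]
college = ["PC", "RCG"]

print(sorted(expand(religion), key=lambda t: (-len(t), t)))
print(is_nested(baseline, religion))  # True: only PR was added
print(is_nested(religion, college))   # False: PR would have to be deleted
print(is_nested(college, religion))   # False: PC would have to be deleted
```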

Let's return to discussing models that allow R, C, and/or G to relate to P. As noted above, adding more linkages will improve (or at least not worsen) the fit between actual and expected frequencies. However, we want to add as few linkages as possible in order to keep the model as simple or parsimonious as possible.

The next model in the chart is [PC, RCG], which allows college-degree status (but no other variables) to predict party ID. There's not much extra bang (9 purple cells) for the buck (using PC instead of just P). The next model, [PG, RCG], which specifies gender as the sole predictor of party ID, yields 11 purple cells. If you could only have one predictor relate to party ID, the choice would be religion (16 purple cells).

We're not so limited, however. We can allow two or even all three predictors to relate to party ID. The fifth red column presents [PRC, RCG], which allows religion, college-degree, and the two combined to predict party ID. Perhaps being a college-educated Catholic disproportionately is associated with identifying as a Democrat (again, I don't know if this is actually true). As with all the previous models, the RCG term allows all the predictors to relate to each other. As it turns out, [PRC, RCG] is the best model of all the ones tested, yielding 18 purple cells. The other two-predictor models, [PRG, RCG] and [PCG, RCG], don't do quite as well.

The final model, on the far right (spatially, not politically), is known as [PRCG]. It allows religion, college-degree, and gender -- individually and in combination -- to predict party ID. In this sense, it's a four-way interaction. As noted, a given interaction includes all lower-order terms, so [PRCG] also includes PRC, PRG, PCG, RCG, PR, PC, PG, RC, RG, CG, P, R, C, and G. Inclusion of all possible terms, as is the case here, is known as a saturated model. A saturated model will yield estimated frequencies that perfectly match the actual frequencies. It's no great accomplishment; it's a mathematical necessity. (Saturation and perfect fit also feature prominently in the next course in our statistical sequence, Structural Equation Modeling.)
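As a quick check on the list of terms in the saturated model, the following short Python sketch enumerates every combination of the four variable letters, grouped by order. It is just a counting aid; the variable names are my own.

```python
from itertools import combinations

VARIABLES = "PRCG"

# Group every non-empty combination of the four letters by its order (size).
by_order = {
    size: ["".join(combo) for combo in combinations(VARIABLES, size)]
    for size in range(1, len(VARIABLES) + 1)
}

for size in sorted(by_order, reverse=True):
    print(f"{size}-way: {', '.join(by_order[size])}")

n_terms = sum(len(terms) for terms in by_order.values())
print(f"total terms in the saturated model: {n_terms}")
```

The total is 15: the four-way term itself plus the 14 lower-order terms it contains.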

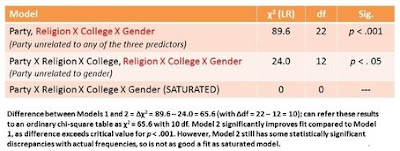

Ideally, among the models tested, at least one non-saturated model will show a non-significant chi-square (badness of fit) on its own. That didn't happen in the present set of models, but the model I characterized above as the best [PRC, RCG] is "only" significant at p < .05, compared to p < .001 for all the other non-saturated models. Also, as shown in the following table, [PRC, RCG] fits significantly better than the baseline [P, RCG] by what is known as the delta chi-square test. Models must be nested within each other for such a test to be permissible. (For computing degrees of freedom, see Knoke & Burke, 1980, Log-Linear Models, Sage, pp. 36-37.)
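Here is a rough Python sketch of the delta chi-square logic. Degrees of freedom are computed as the number of cells minus the number of free parameters, where each term contributes the product of (levels - 1) across its variables; this assumes no empty margins or other estimation problems. The two chi-square values are hypothetical placeholders (the real ones are in the chart), and the p-value shortcut works only when the difference in df is even, which happens to be the case here.

```python
from itertools import combinations
from math import exp

ORDER = "PRCG"
LEVELS = {"P": 3, "R": 3, "C": 2, "G": 2}

def expand(model):
    """Every term implied by a hierarchical model such as ["PRC", "RCG"]."""
    terms = set()
    for generator in model:
        letters = sorted(generator, key=ORDER.index)
        for size in range(1, len(letters) + 1):
            terms.update("".join(c) for c in combinations(letters, size))
    return terms

def model_df(model):
    """Cells minus free parameters (assumes every parameter is estimable)."""
    n_cells = 1
    for k in LEVELS.values():
        n_cells *= k
    n_params = 1  # the constant term
    for term in expand(model):
        free = 1
        for v in term:
            free *= LEVELS[v] - 1
        n_params += free
    return n_cells - n_params

def chi2_sf_even(x, df):
    """Upper-tail chi-square probability; this closed form needs an even df."""
    if df <= 0 or df % 2:
        raise ValueError("this shortcut needs a positive, even df")
    half, term, total = x / 2, 1.0, 0.0
    for i in range(df // 2):
        total += term
        term *= half / (i + 1)
    return exp(-half) * total

df_base = model_df(["P", "RCG"])    # 22
df_best = model_df(["PRC", "RCG"])  # 12
g2_base, g2_best = 95.0, 22.0       # HYPOTHETICAL values, not from the chart

delta_g2 = g2_base - g2_best
delta_df = df_base - df_best
p_value = chi2_sf_even(delta_g2, delta_df)
print(f"delta chi-square = {delta_g2:.1f}, delta df = {delta_df}, p = {p_value:.2g}")
```

In practice, you would take both chi-square values from your software's output and look up the p-value with a statistics package, which handles odd df as well.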

When you tell SPSS to run the saturated model, it automatically gives you a supplemental backward-elimination analysis, which is described here. This is another way to help decide which model best approximates the actual frequencies.

My colleagues and I used log-linear modeling in one of our articles:

Fitzpatrick, J., Sharp, E. A., & Reifman, A. (2009). Midlife singles’ willingness to date partners with heterogeneous characteristics. Family Relations, 58, 121–133.

Finally, we have a song:

Log-Linear Models

Lyrics by Alan Reifman

May be sung to the tune of “I Think We’re Alone Now” (Ritchie Cordell; performed by Tommy James and others)

Below, Dr. Reifman chats with Tommy James, who performed at the 2013 South Plains Fair and was kind enough to stick around and visit with fans and sign autographs. Dr. Reifman tells Tommy about how he (Dr. Reifman) has written statistical lyrics to Tommy's songs for teaching purposes.

Chi-square, two-way, is what we're used, to analyzing,

But, what if you've, say, three or four nominal variables?

Reading all the stat books that you can, seeking out what you can understand,

Trying to find techniques, specifically for, multi-way categorical data,

And you finally find a page, and there it says:

Log-linear models,

You try to re-create, the known frequencies,

Log-linear models,

You try to use as few, hypothesized links,

Each step of the way, you let it use associations,

You build an array, until the point of saturation,

Reading all the stat books that you can, seeking out what you can understand,

Trying to find techniques, specifically for, multi-way categorical data,

And you finally find a page, and there it says:

Log-linear models,

You try to re-create, the known frequencies,

Log-linear models,

You try to use as few, hypothesized links,

Log-linear models,

You try to re-create, the known frequencies,

Log-linear models,

You try to use as few, hypothesized links,
